| Strategic Pillar | Executive Insight |
|---|---|
| Algorithmic Greenlighting | Utilizing predictive sentiment models to de-risk early development, reducing speculative capital loss by 40% in 2025 slates. |
| Neural Rendering (NeRFs) | The shift from 3D polygon modeling to neural radiance fields is compressing virtual production cycles by nearly 60%. |
| Supply Chain Governance | Securing machine-generated IP requires proprietary LoRA models and style-locked seeds to maintain visual continuity and copyright. |
| Vitrina Relevance | Surface the vetted AI-native vendors with proven “Hero Project” deliveries and secure data-handling protocols via VIQI. |

Table of Contents

- Algorithmic Development: The Displacement of Speculative Greenlighting

- Neural Cinematography: From Lenses to Latent Space

- Automated Post-Production: The Democratization of Visual Fidelity

- Governance & IP Security: Protecting the Machine-Generated Supply Chain

- Vitrina Briefing: Sourcing the AI-Native Production Tier


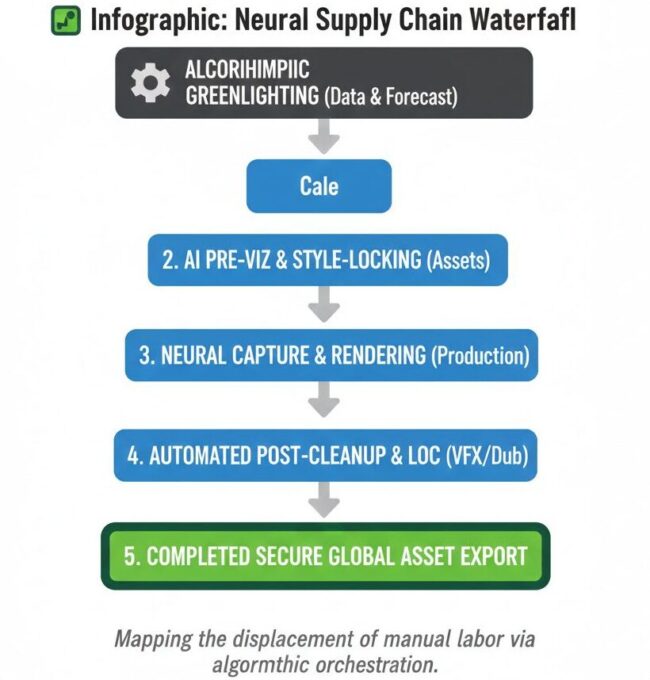

Algorithmic Development: The Displacement of Speculative Greenlighting

AI begins reshaping the entertainment supply chain months before principal photography. Traditionally, development was a speculative bet based on anecdotal “gut feeling” and past performance. In 2025, algorithmic development is bringing that era to a close. According to market intelligence from Ampere Analysis, over 65% of global streaming acquisitions are now influenced by predictive analytics that simulate audience sentiment before a script is even finalized.

The shift is most pronounced in the Concept-to-Visual phase. AI-driven pre-visualization allows producers to “generate” entire episodes in low-fidelity 3D/2D animation to test narrative flow. This reduces the capital risk of “Development Hell” by identifying structural flaws in the story world at a fraction of the traditional storyboard cost.

The bottom line for financiers: AI is not replacing the writer, but it is de-risking the greenlight. By surfacing competitive slates and licensing gaps via Vitrina’s project tracker, studios can identify “Content Voids” and fill them with AI-accelerated developments that are architected for specific regional markets.

Neural Cinematography: From Lenses to Latent Space

Principal photography is facing its most fundamental transition since the arrival of digital sensors. AI's reshaping of the entertainment supply chain is now defined by the latent space, the mathematical environment where neural networks generate photorealistic visual information. According to data from Omdia, the adoption of Neural Radiance Fields (NeRFs) and Gaussian Splatting in virtual production has grown at a 25% CAGR as of late 2024.

Neural rendering enables Digital Location Scouting, in which a single 360-degree video is transformed into a fully navigable 3D environment. This eliminates the need for massive crew movements and costly travel logistics. Instead of flying a unit to a remote territory, the environment is “rendered” on a soundstage with photorealistic accuracy that responds to real-time lighting changes.

Professional studios are solving the Uncanny Valley problem by using AI Performance Capture—a system that enhances the micro-expressions of actors in post-production, ensuring emotional resonance while maintaining the technical efficiency of a digital pipeline. This allows for de-aging and visual performance editing that was previously cost-prohibitive for indie features.

Automated Post-Production: The Democratization of Visual Fidelity

The VFX industry has historically been the bottleneck of the entertainment supply chain, characterized by thousands of artists performing manual rotoscoping and plate cleaning. AI has effectively automated these tasks. According to industry reports from Variety, AI-powered rotoscoping tools have reduced the labor hours for complex “clean-up” shots by nearly 85% in the last 12 months.

This democratization allows mid-budget indie productions to achieve “blockbuster” visual fidelity. By utilizing generative fill and depth-aware AI models, a small team can now execute shots that would have required a Tier-1 VFX house just three years ago. This shift is forcing a massive reallocation of BTL (Below-the-Line) budgets.

The outcome is a faster time-to-market. Episodic series can now maintain higher visual complexity without extending the post-production calendar. For distributors, this means shorter windows between production wrap and global release, maximizing the “hype cycle” and reducing the impact of piracy on theatrical and streaming windows.

Governance & IP Security: Protecting the Machine-Generated Supply Chain

As AI takes on a larger role, the legal architecture of the industry must adapt. The primary concern for studio legal departments is the Ownership Void: purely AI-generated works currently lack human authorship and are therefore ineligible for US copyright protection. Strategic governance now requires a Hybrid Pipeline.

Professional studios must document the Human Intervention at every stage—from specific prompt engineering to manual frame-by-frame retouching. This documentation serves as the audit trail for “Authorship,” ensuring that the final output is a legally protectable asset. Furthermore, Style-Locking via proprietary LoRA models ensures that the AI “knows” the brand’s aesthetic, preventing visual drift across episodes.
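One way to picture such an audit trail is a tamper-evident log in which each human intervention is hashed together with the record before it. The sketch below is illustrative only: the record fields, the `append_record` and `verify_chain` helpers, and the shot and author identifiers are all hypothetical, not an industry standard or any vendor's schema.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class InterventionRecord:
    """One documented human contribution to a shot (hypothetical schema)."""
    shot_id: str
    stage: str          # e.g. "prompt" or "retouch"
    author: str
    description: str
    prev_hash: str      # digest of the previous record, "" for the first one

    def digest(self) -> str:
        # Serialize with sorted keys so the hash is stable across runs.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def append_record(log, shot_id, stage, author, description):
    """Append a record that is chained to the current end of the log."""
    prev_hash = log[-1].digest() if log else ""
    record = InterventionRecord(shot_id, stage, author, description, prev_hash)
    log.append(record)
    return record


def verify_chain(log) -> bool:
    """Return True if every record points at the digest of its predecessor."""
    expected = ""
    for record in log:
        if record.prev_hash != expected:
            return False
        expected = record.digest()
    return True


log = []
append_record(log, "ep01_sh010", "prompt", "artist_a", "wrote prompt v3")
append_record(log, "ep01_sh010", "retouch", "artist_b", "manual paint-out, frames 12-40")
print(verify_chain(log))
```

Editing any earlier record changes its digest and breaks the link held by the record after it. The digest of the final record would still need to be stored somewhere outside the log for the last entry to be protected as well.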

Supply chain integrity is also paramount. Using Vitrina, executives can identify vendors who offer Clean Data models, de-risking the project from potential copyright infringement lawsuits related to training data. The market is currently signaling a premium for vendors who can prove their AI models were trained on licensed or proprietary libraries rather than scraped web data.

Vitrina Briefing: Sourcing the AI-Native Production Tier

Adapting to AI's reshaping of the entertainment industry is ultimately a supply-chain challenge. You cannot execute an AI-first project with a legacy vendor list. Vitrina de-risks this transition by mapping the global ecosystem of AI-native studios: companies built “AI-first” with proven track records in asset persistence and neural rendering.

Use these contextual prompts to signal your requirements and surface the collaborators who can execute your machine-accelerated slate:


Strategic Conclusion

AI's reshaping of the entertainment supply chain is not a creative threat; it is an economic invitation. By shifting the production burden from manual frame-by-frame labor to algorithmic orchestration, studios can unlock a volume of high-fidelity content that was previously financially impossible. The nearly 60% compression in virtual production cycles and the democratization of high-end VFX allow for more daring, diverse, and niche stories to reach a global audience without the “Blockbuster or Bust” capital risk.

The path forward requires a transition from “Maker” to “Showrunner-as-Editor.” As the barriers to high-end visuals drop, the value of the Original IP and the Verified Supply Chain rises. Success in the AI-accelerated era is reserved for those who can identify the right technical collaborators early and secure their “Creative Engines” behind proprietary firewalls. Vitrina provides the verified metadata and executive-level connections necessary to transform AI’s promise into a high-yield, structurally sound production reality.

Strategic FAQ

How is AI actually reducing entertainment supply chain bottlenecks?

AI addresses bottlenecks by automating high-frequency manual tasks like rotoscoping, background plate clean-up, and localization dubbing. This reduces post-production turnaround times by up to 70%, allowing for simultaneous global release slates.

What is a “Style-Locked” AI workflow?

A style-locked workflow utilizes proprietary training data and LoRA (Low-Rank Adaptation) models to ensure that AI-generated visuals maintain character and aesthetic continuity across every frame and episode, preventing the “drift” common in open-source models.
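For readers who want the mechanics behind the acronym, the standard LoRA formulation can be written as below. The symbols are not from this article: $W_0$ is assumed to be a frozen pretrained weight matrix, $A$ and $B$ the small trainable matrices that carry the studio's style, $r$ the chosen rank, and $\alpha$ a scaling constant.

$$
W = W_0 + \frac{\alpha}{r}\, B A, \qquad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
$$

Because only $A$ and $B$ are trained, the adapter is a small file that can be kept proprietary and applied on top of a shared base model.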

How do studios secure copyright for AI-generated film assets?

Studios secure copyright by implementing a “Hybrid Pipeline” where human creators provide substantial creative input. Rigorous documentation of prompts, manual edits, and skeletal guidance serves as the proof of human authorship that copyright offices, such as the US Copyright Office, look for when registering a work.

What is neural localization in distribution?

Neural localization uses AI-native voice cloning and lip-sync technologies to dub content into multiple languages while preserving the original actor’s vocal performance and emotional nuance, lowering dubbing costs by nearly 80%.